What do I do about college? Is school worth it? Is everyone just going to be a vibe coder?

Nate, how can I show what I’m good at in a world where resumes are all AI? I have skills and no one will pay attention!

I get these questions a lot. And they come down to something really fundamental: for a long time we based our economy on knowledge, and knowledge is an inflationary currency.

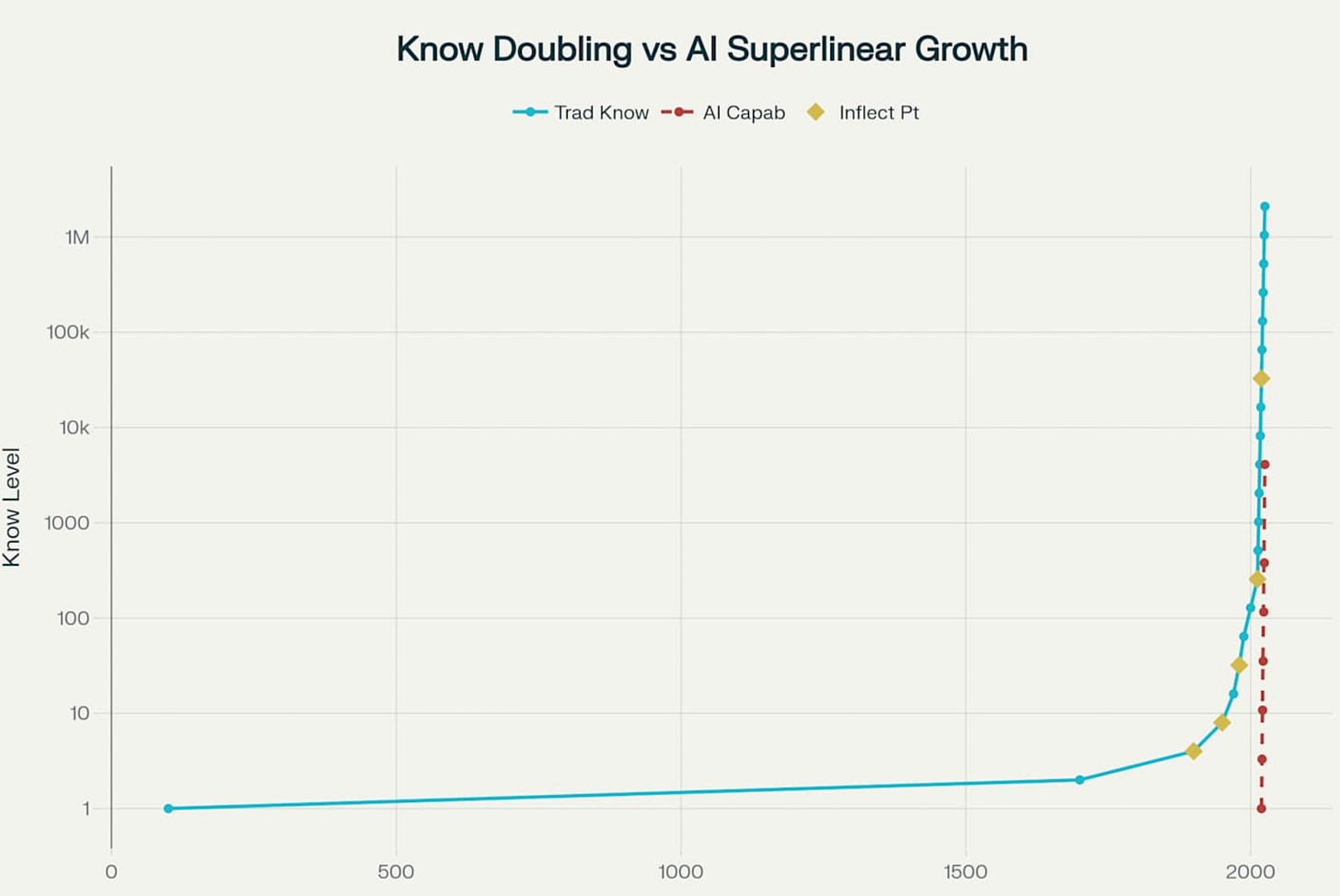

In fact, knowledge has been hyperinflating recently.

For us humans, the question is simple: in a world where the currency we’ve used to articulate meaning is up for grabs, what do we do?

This stuff tends to get whispered about or shouted about (doomer style), and it's rarely discussed thoughtfully. I want to dig deeper here. First, I want to unpack the distinction between jobs and skills; I've referenced it before but never explored it in detail.

Then I want to respond directly to two of the biggest elephants in the room: the question of AGI and the question of college. They're entangled, and I lay out a response to each. Both rest on this knowledge-economy question, so it makes sense to address them here.

This free post is available to all subscribers, and is an example of the kind of thing paid subscribers get daily.

I’ll be sending my inaugural weekly exec brief to members of the AI Exec Circle this coming Sunday morning. If you’d like to receive it, you can change your plan here.

The Meaning Collapse

There's a radiologist in Cleveland right now staring at an AI system that reads scans better than she does. She's not worried about her job—demand for radiologists is actually increasing. But something darker gnaws at her: if a machine can do in seconds what took her a decade to master, what exactly is she?

This isn't a story about jobs. It's about the collapse of knowledge as the fundamental currency of human worth, and the reorganization of meaning that follows. Knowledge is going to keep growing at a breakneck pace, but what about us? That's what we all wonder, and I want to lay out a structured way to think about it head-on here.

Knowledge hyperinflation

We're experiencing the first knowledge hyperinflation in human history. Not the gentle devaluation that came with printing presses or calculators, but a complete collapse of the economic and social value of knowing things. Your MBA, your decades of experience, your painstakingly acquired expertise: they're Weimar Republic paper marks, worthless before the ink dries on your certificate.

This isn't new. Knowledge has been inflating for decades—each generation needing more education for the same economic position their parents held. But AI represents the moment inflation tips into hyperinflation. When anyone can access all human knowledge instantly and synthesize it perfectly, what happens to the social order built on information scarcity? The same thing that happens to any currency when supply goes infinite: complete systemic collapse.

Andrew Peterson's research at the University of Poitiers names this "knowledge collapse": AI systems generate outputs clustered around the center of their probability distributions, progressively narrowing the spectrum of available knowledge. In his model, when AI-generated content is just 20% cheaper to access than other sources, public beliefs end up 2.3 times further from the truth than with no discount. We're not just devaluing knowledge; we're homogenizing it.

Jobs aren’t skills…

Here's where every automation attempt goes wrong, from Roger Smith's GM to today's AI evangelists: they decompose jobs into discrete skills, see machines master those skills, then assume the job itself can be automated. This reductionist view misses everything that makes human work valuable.

A lawyer isn't just someone who knows precedents—that's the skill. The job involves reading a room, sensing when a client is lying, knowing when to push and when to yield, building trust over decades. A radiologist doesn't just read scans—she translates between machine precision and human fear, catches the one-in-a-thousand case that breaks the pattern, provides the human presence that transforms diagnosis from data to meaning.

The skills can be automated. But for the job, that complex web of judgment, relationships, and context, we have no realistic map of what automation would even look like.

PwC's 2025 data reveals this starkly: industries most exposed to AI show three times higher growth in revenue per employee, and wages there are rising twice as fast. Why? Because organizations that understand jobs as more than skill bundles use AI to amplify human capability rather than replace it. Those who see only skills to automate end up like GM: robots painting each other while market share evaporates.

GM finds out the hard way

Roger Smith spent upwards of $90 billion in the 1980s pursuing the ultimate reductionist dream: decompose car manufacturing into discrete tasks, automate each task, eliminate the workers. The Detroit-Hamtramck Assembly plant opened in 1985 as his monument to this vision.

In hindsight, the results read like a preview of our current moment. Spray-painting robots coated each other instead of cars. Welding robots sealed doors shut. The "robogate" systems that were supposed to revolutionize assembly instead created catastrophic bottlenecks whenever they encountered variations outside their programming. GM's market share plummeted from 46% to 35.1% during Smith's tenure.

The bitter lesson came through NUMMI, GM's joint venture with Toyota. Working with what had been regarded as GM's worst workforce, Toyota achieved world-class quality not through automation but by recognizing that manufacturing jobs involve continuous improvement, problem-solving, and adaptation: human capabilities that no amount of discrete skill automation could replicate. GM had automated the skills but lost the job.

So what sticks if knowledge doesn’t?

In a world of hyperinflated knowledge, what is hard currency? Let me suggest a non-exhaustive list here. I’ve written about a few of these before, but never nailed them down in a single clean list like this. What would you add?

Glue Work: The invisible labor that connects systems, translates between domains, and maintains coherence. The nurse who bridges between AI diagnosis and patient understanding. The project manager who transforms algorithmic outputs into team direction. Undervalued before, essential now.

Taste: Amid infinite possibility, knowing what to build matters more than knowing how. The creative director choosing from AI's million options. The product manager selecting features when anything is technically possible. Taste can't be automated because it's not a skill; it's accumulated judgment about what matters.

Extreme Agency: The ability to operate with minimal direction, maximal ownership. When AI handles execution, humans must excel at goal-setting, priority-defining, and course-correcting. Agency isn't following instructions—it's knowing what instructions to create.

Learning Velocity: Not knowledge accumulation but adaptation speed. The half-life of technical skills has compressed to 2.5 years. Value accrues to those who learn faster than knowledge inflates, who surf the wave of obsolescence rather than drowning in it.

Intent Horizons: The capacity to maintain coherent goals across extended timeframes. AI excels at optimizing immediate objectives but lacks the ability to balance competing long-term priorities. Humans provide the narrative coherence that prevents optimization from becoming self-defeating.

Interruptibility: The meta-skill of knowing when to stop the machine. As with Toyota's jidoka principle (automation with a human touch), value concentrates in those who recognize when systems are failing in ways metrics can't capture.

These aren't skills in the traditional sense. They're ways of being that emerge from the complex intersection of personality, experience, and judgment. They resist the decomposition that makes automation possible.

If we don’t know, what are we?

Here’s the thing: The radiologist's crisis isn't economic—it's ontological. For centuries, we built identity on accumulating knowledge. "I am what I know" was the tacit creed of the professional class. Degrees, certifications, years of experience—these weren't just economic signals but existential anchors.

Knowledge workers report increasing anxiety about professional relevance, imposter syndrome when AI outperforms their core competencies, and fundamental uncertainty about career direction. The psychological impact extends beyond individual identity to social structure. When knowledge no longer confers status, what organizing principle replaces it?

The answer emerging from successful AI adoptions: we shift from "I am what I know" to "I am how I connect, judge, and create meaning from what machines know." This is the world of AI agent managers that Jensen Huang laid out in January 2025. The lawyer who saves four hours a week with AI, while achieving higher accuracy than either AI or lawyers working alone, isn't replaced; she's amplified. Her value shifts from information storage to wisdom application.

The paradox of circuit breakers

Financial markets learned this through trillion-dollar near-disasters. Even with algorithms executing roughly 70% of trades, markets depend on circuit breakers, halt rules designed by humans, to prevent catastrophic losses. When the S&P 500 falls 7% below the prior day's close (before 3:25 p.m. Eastern), trading halts for 15 minutes: not so algorithms can recalibrate, but so humans can assess whether the selloff reflects reality or algorithmic panic.

March 2020 proved the point: circuit breakers triggered four times in a single month, interrupting algorithmic feedback loops before they could destroy more market value. The paradox: as trading becomes more automated, human judgment becomes more critical, not less. The humans don't execute the trades; they set the rules for when the machines must stop.
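
The halt rules are mechanical enough to sketch. Below is a simplified Python sketch of the U.S. market-wide circuit breakers as commonly described: Level 1 at a 7% decline, Level 2 at 13%, and Level 3 at 20%, all measured against the S&P 500's prior-day close. Level 1 and Level 2 halts last 15 minutes, apply at most once per day, and don't apply at or after 3:25 p.m. Eastern; Level 3 ends trading for the day. The function name `circuit_breaker_action` and its parameters are hypothetical, and the real exchange rules carry more detail than this. What it illustrates: people fix the thresholds in advance, and the pause exists to give people time to judge.

```python
from datetime import time

# Simplified sketch (assumption: decline measured from the prior day's close,
# times in U.S. Eastern). Checked from most to least severe.
LEVEL_THRESHOLDS = [(3, 0.20), (2, 0.13), (1, 0.07)]
LATE_DAY_CUTOFF = time(15, 25)  # Level 1/2 halts do not apply at or after 3:25 p.m.


def circuit_breaker_action(prior_close: float, current: float, now: time,
                           already_triggered: set) -> str:
    """Return what a rule-based market-wide circuit breaker would do.

    already_triggered holds the levels (1 or 2) that have halted trading today;
    each of those levels can halt trading at most once per day.
    """
    decline = (prior_close - current) / prior_close
    for level, threshold in LEVEL_THRESHOLDS:
        if decline >= threshold:
            if level == 3:
                # A Level 3 decline halts trading for the rest of the day at any time.
                return "halt for the rest of the day"
            if level in already_triggered or now >= LATE_DAY_CUTOFF:
                return "continue trading"
            return f"level {level}: halt for 15 minutes"
    return "continue trading"
```

For example, a 7.5% drop at 10:00 a.m. (`circuit_breaker_action(100.0, 92.5, time(10, 0), set())`) yields a 15-minute Level 1 halt, while the same drop at 3:30 p.m. lets trading continue.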

Tesla's Full Self-Driving is a really interesting test of this hypothesis. Tesla's essential bet is that it has now seen enough edge cases to be safe on the road: safer than humans, and safe enough to launch Robotaxi. Tesla is probably right that its system is safer than people (it's a low bar), but judging by the pattern of past investigations, we humans are likely to remain far less tolerant of robot driving errors than of human ones. We will absolutely apply a double standard here. And I have no doubt that most driving will stay in human hands for a good long while.

The great miscalculation

Organizations pursuing pure automation make the same error: they see tasks, not systems. They automate skills, not jobs. They replace capabilities, not judgment. The failure rate is predictable and brutal.

IBM Watson Health: sold for about $1 billion after IBM invested roughly $5 billion. Google's diabetic retinopathy system: highly accurate in the lab, but rejecting 21% of real-world images in clinics, largely due to poor lighting. Amazon's AI recruiting tool: scrapped after it systematically discriminated against women. Each failed by automating the measurable while failing to measure what mattered.

The successes tell the opposite story. Swedish breast cancer screening that combined AI with radiologists detected 20% more cancers while reducing screen-reading workload by 44%. Legal-industry surveys estimate that AI-augmented lawyers could add roughly $100,000 in billable time per lawyer each year. Manufacturing technology investment reached $2.81 billion in 2024, focused on collaborative robots that work alongside humans rather than replacing them.

I’m not here to promise you that organizations won’t make this screw-up again. They will. Nor am I here to make the Pollyanna-ish claim that no jobs will be lost to AI. Nor am I trying to say that automation won’t work.

I’m making a subtler point: by framing jobs in terms of systems of skills we are extending 20th century managerial philosophy to the nth degree, and we are going to find out (again) that jobs are more complex than we realize. Work that matters is more complex than we realize. And the skill list I’ve outlined above is a way to start to characterize the world of work beyond the world of knowledge—a description of all the other stuff we do besides know stuff!

The 56% premium

What’s 56%? This: Workers with AI skills command 56% wage premiums—up from 25% a year ago. The real tell? Zuckerberg is now poaching AI researchers for $10 million (or more) packages, not just to build better models but to figure out how to help Meta win the AI race. The premium isn't for AI expertise itself—it's for knowing how to bridge AI capabilities to real-world value.

The World Economic Forum projects 78 million net new jobs by 2030 (170 million created, 92 million eliminated), concentrated in roles that use AI as a tool rather than a replacement. McKinsey (I know lol) finds that 30% of work hours could potentially be automated, but actual displacement remains far lower as organizations discover the irreplaceable value of human judgment.

The premium doesn't attach to AI skills themselves; those commodify quickly and are somewhat ephemeral as AI systems evolve. It attaches to the meta-skill of staying valuable, and staying close to real problems, as individual skills commodify. It rewards those who understand that in a world of infinite knowledge, scarcity shifts away from knowledge toward other human capacities that can't be acquired simply by knowing more things.

The choice that defines the next decade

We stand at an inflection point. Not between humans and machines—I think that's a false binary. But between two visions of human worth:

Desperately trying to out-know machines, accumulating credentials in a hyperinflationary spiral—trying to compete for the knowledge prize with machines

Developing the judgment to know when machines are wrong, rigid, or heading toward catastrophe—or more deeply, learning to partner with machines

The first path leads to existential crisis and economic irrelevance. The second leads to a new form of human value—not despite AI's capabilities, but because of them.

The radiologist in Cleveland faces something more complex than a skills crisis. In one study, evaluators rated ChatGPT's answers to patient questions as more empathetic than physicians' answers. AI reads scans more accurately. But work isn't individual tasks. It's the bundling of tasks with ownership, liability, interruptibility, long-term thinking, and meaning-making within a team and between a team and patients. When the AI misses a tumor, who gets sued? When a patient needs someone to blame, who stands there and takes it?

And it’s not just negative. Can the AI give the patient a hug? Can the AI ask for a second opinion? Can the AI step over and look over a buddy’s shoulder? Does the AI tolerate switching to an entirely different patient history within the same chat?

We could go on and on, but more fundamentally: if work is the act of making meaning, and LLMs are what Andrej Karpathy calls "people spirits," stochastic simulations of people that simulate meaning without creating it, then we face unforeseen obstacles to getting actual work done. We don't know what it takes to truly automate work! The gap between an LLM's summary of War and Peace and actually reading War and Peace isn't about information transfer. It's about experience, transformation, the irreducible difference between knowing about something and knowing it.

That's not a job description. It's the difference between simulating a human and being one. And maybe that means knowledge isn't the point.

The question isn't whether AI will take your job. It's whether you'll discover what humans are actually for before your knowledge becomes worthless. The clock is ticking. The currency of expertise is collapsing faster than any of us are comfortable with, and that collapse demands we cultivate a much wider range of skills than most of our educational systems prepared us for. It's not easy.

But maybe, just maybe, that's exactly when we discover what being human was always supposed to mean. I know it's cheesy, but over the past few years I've watched individual people go through this stage of reflection, and I think it's a real post-AI moment of human realization. Call it a post-AI midlife crisis if you like.

And right on cue, we have the biggest objection to all this:

Yes, but what about AGI?

"Why prepare for a human-AI hybrid future when AGI will make us all obsolete in a year anyway?"

Here's the honest answer: that’s probably not a correctly framed question.

Annoying, I know. But it matters.

AGI might be achievable with scaled LLM architectures (maybe?). Intent horizons are doubling every few months, from 3 hours to 7 to potentially 30. Memory implementations get more sophisticated daily. In a year or two, these systems might maintain coherent goals for weeks or months. Things will get smarter in jagged ways.

But here's what's becoming clear: as LLMs scale brilliantly on the knowledge dimension, we have no clear picture of how they're scaling on the dimensions that actually matter for getting work done. They're building a ladder to the moon while we need bridges between islands.

The core issue isn't whether they'll achieve long-term memory—they probably will. It's whether that memory will have the magical intuitive flexibility that makes human minds special. Current implementations are like lossy JPEGs that sometimes hallucinate what was in the compressed bits: they can retrieve the gist, miss crucial details, and occasionally invent things that were never there. When you need the exact contract clause or the specific drug interaction, "mostly right" isn't right.

I’m not clear if the direction we’re going is the correct direction to address some of these fundamental capabilities gaps with humans, and what I’ve seen so far doesn’t give me the impression we’re making very fast progress on some of the human skills I outlined above. (Most humans breathe in relief here lol)

Another example: LLMs excel at going deep within domains but struggle at the boundaries where real work happens. They can generate brilliant code within a well-defined problem space but miss the moment when the technical challenge has become organizational. They can write perfect legal briefs but fail to recognize when the legal strategy needs to become a business negotiation. This isn't a bug; it's the difference between knowledge and judgment.

Which brings us to what matters: in a world where knowledge can be instantly accessed and credentials can be faked with a ChatGPT account, what constitutes genuine proof of work?

Really excellent software that actually ships and works. You can't fake a codebase that handles real users, real scale, real edge cases. The gap between "demo that impresses in an interview" and "system that survives production" can't be bridged by prompting. It requires the thousand small decisions, trade-offs, and intuitions that come from genuine experience.

Writing that changes how people think. Not just grammatically correct prose or well-structured arguments, but writing that creates new mental models, that makes readers see the world differently. AI can imitate style but can't generate the lived experience and unique perspective that makes writing resonate at a deep level.

Successful cross-functional projects. Anything requiring navigation across technical, business, and human domains. The project manager who ships a feature by aligning engineering, design, sales, and legal isn't just coordinating—they're translating between incompatible worldviews, maintaining coherence across context switches that break AI systems.

Building and maintaining trust networks. Reputation that accumulates over years through consistent judgment calls. The venture capitalist whose portfolio succeeds not through any single brilliant insight but through thousands of micro-decisions about people and possibilities. This can't be speedrun or simulated.

Cultural creation and curation. The creative director who consistently identifies what will resonate before it's obvious. The editor who develops writers. The A&R person who finds artists before they break. Taste that predicts and shapes culture rather than following it.

High-stakes decision-making under uncertainty. The surgeon who recognizes when to deviate from protocol. The pilot who safely lands a damaged plane. The CEO who navigates a crisis. Situations where judgment must integrate incomplete information, competing priorities, and irreversible consequences.

The pattern? These are all outputs that emerge from the messy intersection of knowledge, experience, judgment, and human connection. They're what remains when pure information processing becomes commoditized.

Anyway, there's something deeper here. If work is fundamentally about making meaning—not just processing information but creating significance—then we face an unexpected obstacle. LLMs are brilliant at simulating meaning, generating text that feels profound, responses that seem empathetic. They're stochastic spirits that can mimic understanding perfectly. But mimicry isn't meaning. The difference between an AI's summary of War and Peace and the experience of reading it isn't about information—it's about transformation, about being changed by the encounter.

Work bundles tasks with ownership, liability, and the human act of meaning-making. When AI makes a medical error, who owns it? When a project fails, who takes responsibility? When success happens, who finds meaning in it? These aren't technical problems but existential ones. They can't be solved by better algorithms because they're not about processing—they're about being.

We're watching the greatest shift in human value since the industrial revolution. Not because AI will replace humans, but because it forces us to identify what was always most valuable about human work: not the knowledge we store but the connections we make, not the problems we solve but knowing which problems matter, not executing tasks but navigating the undefined spaces between them.

The timeline question misses the point. Whether AGI arrives in 2025 or 2050, the humans who thrive will be those who understand that in a world of infinite knowledge, value concentrates in judgment, taste, and the ability to navigate discontinuity. The clock isn't ticking on human obsolescence—it's ticking on our willingness to recognize what makes us irreplaceable.

OK, and one last question for the road…

What about college?

On June 24, 2025, just days ago, Monster and CareerBuilder filed for Chapter 11 bankruptcy. These titans of the early internet, which once bought Super Bowl ads to revolutionize job hunting, collapsed under $100 million to $500 million in debt. Their demise isn't just another tech casualty. It's the canary in the coal mine for our entire credentialing system.

Since Oxford's founding—teaching existed there by 1096, though the university rapidly developed from 1167—universities have served as civilization's knowledge gatekeepers. For nearly a millennium, the path was clear: accumulate knowledge, earn credentials, trade them for economic and social position. This system survived the printing press, the industrial revolution, and the internet. It won't survive AI.

The numbers are staggering, even if contested. Surveys show anywhere from 30% to 89% of college students using ChatGPT for homework, with most estimates hovering around 40% for regular use. Regardless of the exact figure, one educator's observation rings true: this is education's "Lance Armstrong moment." When enough players cheat with high upside and low consequences, others feel forced to cheat to compete. It becomes, in Armstrong's words, "impossible to win without doping."

But here's the deeper crisis: if knowledge is now free and instant, what exactly are universities selling? Not information—ChatGPT provides that. Not skills—YouTube tutorials teach those. Not even critical thinking—AI can simulate that too. They're selling something increasingly abstract: the idea of credibility in a world where credentials can be faked with a prompt.

The job market reflects this confusion. Nearly half of companies say they plan to eliminate bachelor's degree requirements, yet paradoxically, 59% of employers say degrees matter MORE than five years ago. We're watching a system in violent transition, unsure whether to double down on traditional credentials or abandon them entirely.

This isn't just about cheating or job requirements. It's about the collapse of an entire social technology. Degrees served as universal signals—imperfect but shared fictions that enabled coordination. An MBA from Wharton meant something specific. Ten years at McKinsey conveyed particular competencies. Now these signals are noise. You can fake the knowledge, simulate the skills, even mimic the writing style. What can't be faked?

The answer emerging from forward-thinking educators and employers: proof of work that demonstrates judgment, not just knowledge. Ship code that handles real users. Write something that changes how people think. Navigate complex projects across domains. Build trust networks over years. Create culture rather than consume it. Make high-stakes decisions where failure has real consequences. Yes, there's an on-ramp here, but these ideas can change how we show value, and that's important.

These outputs resist AI assistance not because they're technically difficult but because they require ownership, liability, and the human act of meaning-making. When an AI-written essay fails to persuade, who takes responsibility? When generated code crashes in production, who fixes it at 3am? When a decision goes wrong, who stands before the board?

Universities that survive will transform from knowledge-delivery systems to judgment-development institutions. They'll teach not what to think but how to think when infinite information is available. They'll credential not information retention but the ability to navigate discontinuity, own outcomes, and create meaning from noise.

The students cheating with ChatGPT aren't lazy; they're rational actors in an irrational system (I think Cluely's Roy Lee agrees with me on this one thing lmao). They're using 21st-century tools to game 19th-century assessments for 11th-century credentials. The real scandal isn't that they're cheating. It's that we're still pretending the old game matters.

Monster's bankruptcy filing listed the cause as a "challenging and uncertain macroeconomic environment." But that's corporate speak for a simpler truth: when knowledge becomes worthless, the infrastructure of knowledge-trading collapses too. First the job boards. Next, perhaps, the universities that feed them.

Unless they remember what they're actually for!

Good luck out there. Stay Curious. Stay human.

Sources

Monster/CareerBuilder Bankruptcy Sources

Washington Post – CareerBuilder, Monster File for Bankruptcy

Staffing Industry Analysts – CareerBuilder, Monster Selling Businesses

North Bay Business Journal – CareerBuilder, Monster Bankruptcy

AI/ChatGPT in Education Sources

Employment/Hiring Trends Sources

Association of Public and Land-grant Universities – Employment and Earnings

Bureau of Labor Statistics – Employment Situation Report (PDF)

Contemporary AI Failures (McDonald’s, Tesla, etc.)

Restaurant Business Online – McDonald’s Ending Its Drive-Thru AI Test

AP News – McDonald’s Ends AI Drive-Thru Partnership with IBM

CNBC – Tesla Faces NHTSA Investigation After Fatal Collision

Washington Post – NHTSA Investigates Tesla's Full Self-Driving System