I’ve run office hours with hundreds of people over the past year—Fortune 500 teams, independent builders, consultants trying to scale their practice with AI.

And I kept hearing the same frustration, just wearing different faces:

“I don’t understand why this isn’t working. I feel like I’m being clear.”

“I’ve tried those fixes for hallucinations, and look, they’re not working!”

“I keep rephrasing the prompt and it’s not changing anything.”

It gradually dawned on me: there is a class of problems in AI use that are sticky and resist initial attempts to solve them. They tend to persist, and that’s what pushes people to come to office hours with me.

And what’s interesting is that I kept seeing the same problems in different disguises over and over again. After looking across my notes, I found six recurring problem types that popped up for engineers and non-coders alike. So I did a bit of digging, and it turns out those six are showing up everywhere, including in a study across 29,000 forum questions on the OpenAI website.

Now this gets interesting, because we have essentially six LLM behavioral ‘diseases’ (for lack of a better term), and all of them are hard to treat at first pass.

My task was to figure out a battery of sophisticated treatments that you can quickly and easily (like copy-paste) apply as a non-coder OR as an engineer to maximize your odds of success against these persistent issues.

Also, I had to name them! Some of them are known villains (hello hallucinations) and others are not widely discussed, but they all needed clear names in order for us to have a conversation and make progress together.

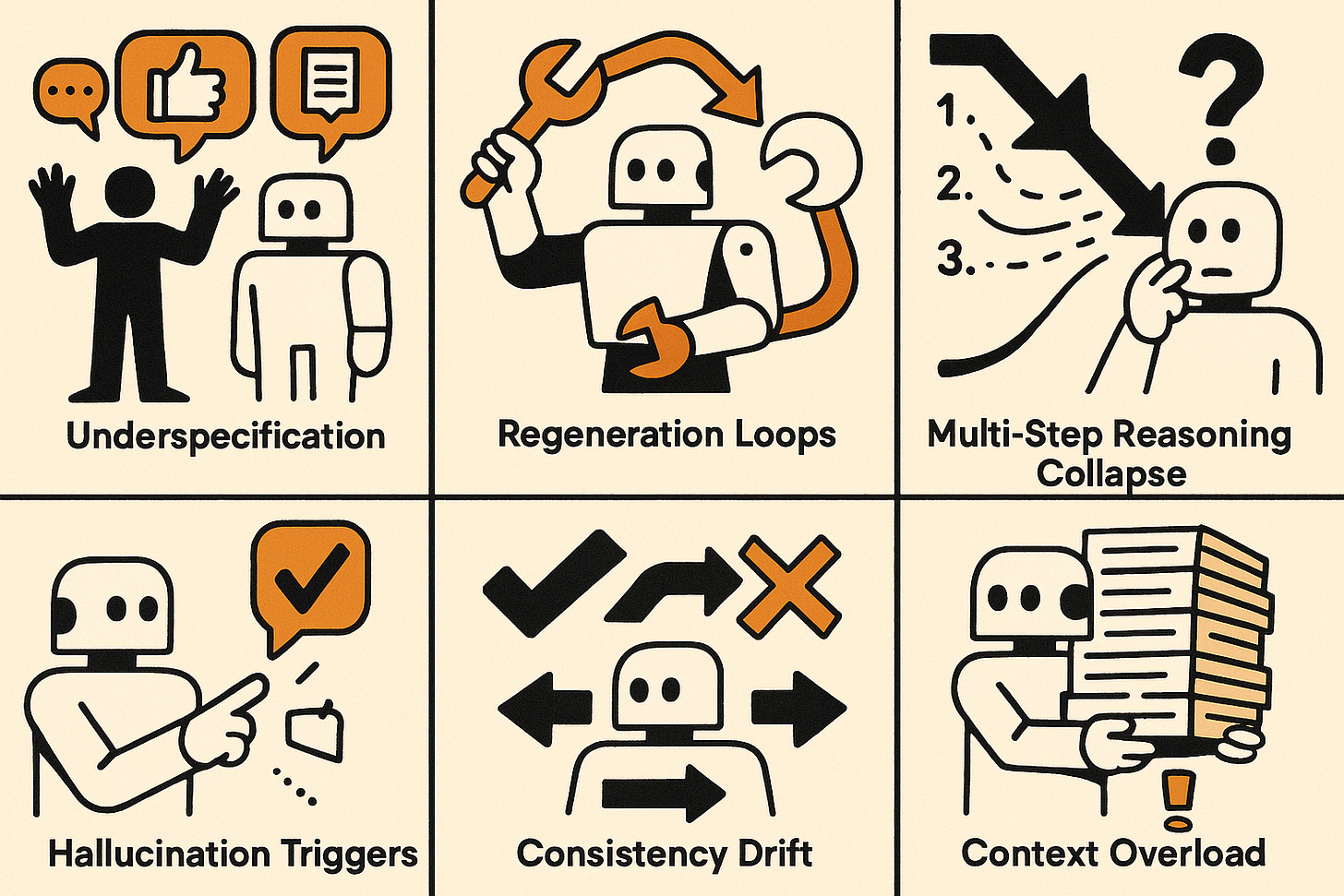

Here are the six problems:

Under-specification — When more (or fewer) words can BOTH hurt

Regeneration Loops — When touching it makes the tower fall down

Multi-Step Reasoning Collapse — When it says it thinks and it f*ing lies

Hallucination Triggers — The nasty causes of hallucinations

Consistency Drift — When you can’t get the same result twice

Context Overload — Adding all the words was a BAD idea

Visuals help, and I even made a little cartoon to help you visualize these guys:

Now, what are we gonna do about it?

Here’s what I’m thinking:

I built you a complete treatment plan for each disease. Not a single fix—a multi-layered approach that works whether you’re typing in ChatGPT or building with the API.

For each of the six problems, you get:

The Chat Fix — A prose template you can copy-paste into any chat interface today. No coding, no setup, super easy.

The Advanced Fix — JSON schemas and API implementations that enforce the rules programmatically. For engineers building tools or automating workflows, these put bowling ball bumpers up to protect your prompts in production.

Real Examples So You Can See It Work — Every fix includes a range of concrete scenarios: product emails, vendor selection, customer research, code debugging, weekly reports, contract analysis. You see the exact prompt structure applied to actual work, not generic “write me a poem” examples.

Good Signs / Bad Signs Diagnostics — Checklists that tell you whether your fix worked. “First draft is 80%+ there” vs. “Still getting wrong length”: observable signals, so you’re not guessing whether you solved it.

The Twist You Didn’t See Coming — Each problem includes digging into WHY models behave this way. Sort of a root cause of the disease, if you will. These aren’t tips—they’re the structural reasons the problem exists.

Plus: The Meta-Diagnostic Prompt — One master prompt that analyzes your broken prompt against all six problems, tells you which one(s) you’re hitting, and gives you the specific fix to apply. It’s like having the pattern recognition from my office hours in your pocket.
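To give you a feel for the shape of a meta-diagnostic prompt, here is a minimal Python sketch that wraps a broken prompt in a diagnostic request covering the six problems. To be clear, this is not the actual master prompt: the template wording, the one-line hints (paraphrased from the list above), and the function name `build_diagnostic_prompt` are all assumptions made for illustration.

```python
# Hypothetical sketch: wrap a broken prompt in a request to diagnose it.
# The six names come from the list above; the hints are paraphrases and
# the template wording is an assumption, not the real master prompt.

SIX_PROBLEMS = {
    "Under-specification": "too few or too many words can both hurt",
    "Regeneration Loops": "touching one part makes the rest fall down",
    "Multi-Step Reasoning Collapse": "it claims to reason but the steps do not hold",
    "Hallucination Triggers": "something in the prompt invites made-up facts",
    "Consistency Drift": "you cannot get the same result twice",
    "Context Overload": "too much pasted context buries the task",
}


def build_diagnostic_prompt(broken_prompt: str) -> str:
    """Return a prompt asking a model to match `broken_prompt` to the six problems."""
    problem_lines = "\n".join(
        f"{i}. {name}: {hint}"
        for i, (name, hint) in enumerate(SIX_PROBLEMS.items(), start=1)
    )
    return (
        "You are reviewing a prompt that is not producing good results.\n"
        "Check it against these six problem types:\n"
        f"{problem_lines}\n\n"
        "Name the problem type(s) that apply, explain why, and suggest a fix.\n\n"
        "PROMPT TO REVIEW:\n"
        f"{broken_prompt}"
    )


if __name__ == "__main__":
    print(build_diagnostic_prompt("Write a good email about our product."))
```

You would paste the printed text into a chat window, or send it as the user message in an API call, and read the diagnosis before rewriting anything.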

The Full Arsenal:

Really clear explanation of WHAT the heck these are in a way you can remember

25 working doctor prompts (baseline + advanced for each problem)

Decision frameworks that replace guessing with structure

Simplified, actionable guidance on advanced techniques that fix these issues: preservation boundaries, confidence requirements, validation gates (think of these as easy ways to get the fancy medicine)

Clear explanations of which new AI features (structured outputs, reasoning controls, verification fields) actually solve which of the 6 problems
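If “validation gates” and “confidence requirements” sound abstract, here is a rough Python sketch of the idea: check a model’s JSON reply before you accept it. The field names (`answer`, `confidence`, `sources`), the 0.7 threshold, and the function `validate_reply` are hypothetical choices for illustration. Real structured-output features differ by provider, so treat this as a sketch under those assumptions rather than any particular API.

```python
import json

# Assumed reply shape: these field names and the threshold are illustrative only.
REQUIRED_FIELDS = {"answer": str, "confidence": (int, float), "sources": list}
MIN_CONFIDENCE = 0.7


def validate_reply(raw_reply: str) -> list[str]:
    """Return a list of problems; an empty list means the reply passes the gate."""
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError:
        return ["reply is not valid JSON"]
    if not isinstance(data, dict):
        return ["reply is not a JSON object"]

    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(data[field], bool) or not isinstance(data[field], expected_type):
            problems.append(f"wrong type for field: {field}")

    # Confidence requirement: only checked when the value is a real number.
    confidence = data.get("confidence")
    is_number = isinstance(confidence, (int, float)) and not isinstance(confidence, bool)
    if is_number and confidence < MIN_CONFIDENCE:
        problems.append("confidence below threshold")
    return problems


if __name__ == "__main__":
    good = '{"answer": "Vendor B", "confidence": 0.9, "sources": ["pricing sheet"]}'
    bad = '{"answer": "Vendor B", "confidence": 0.4}'
    print(validate_reply(good))
    print(validate_reply(bad))
```

The point of the gate is that a failed check becomes a concrete reason to retry or escalate, instead of a bad answer quietly slipping into your workflow.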

This isn’t “try these tips and hope.” It’s a diagnostic system with lots of proven treatments for each of these problems.

When something breaks, you match it to one of six patterns, apply the corresponding fix, and verify it worked with the checklist.

Nate, how hard is this really? I buy that these are real issues, but they’re HARD.

It’s totally solvable! I’ve solved all six in my own chatbot. I’ve solved them with agents in the API. And once you get the hang of it, you can solve them too.

And that’s why this post is all about giving you LOTS of examples to get the hang of the solution pattern so it’s easy to apply. My goal is for you to have a whole kit of tools to go after these issues when they arrive.

A long, long time ago I worked in tree care, and one of the worst things you see as an arborist is a tree with a fungal infection. It’s hard to treat, it’s a systemic issue, and it takes multiple approaches to address. Well, same here!

With some of these persistent issues it can feel like your ‘tree’ (your AI chatbot) has a disease. As I like to say: Don’t panic! My goal is to give you the tools to get healthy and get back to a productive and collaborative AI-human relationship.

Happy (and healthy) prompting, from the prompt doctor.
